Is your Cloud platform secure by default? By Brett Hargreaves, Cloud Practice Lead at Iridium

Posted on 11th July 2022 at 13:15

Background

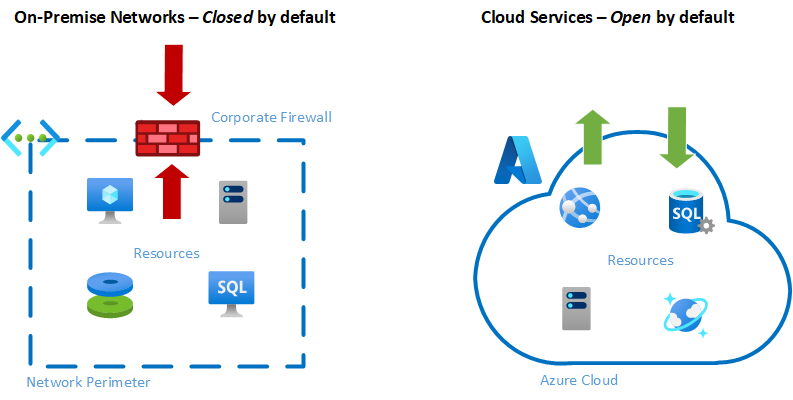

Many companies have internal and external security requirements for both regulatory and safety reasons. A common mistake made by many organisations is that they assume the same mindset when building Cloud applications as they do when building on-premises systems.

From a security perspective, this can lead to resources being deployed that are not as secure as believed, or that are simply non-compliant with an organisation's policies.

This comes from the fact that established enterprises have developed strict and robust governance and review processes for new systems, along with physical and technical security controls to protect internal systems. The most obvious of these are the corporate firewalls, which sit at the edge of an on-premises network and control the flow of traffic both inbound and outbound.

As an on-premises network is wholly internal, external access must be explicitly designed and configured. In other words, if no access is requested for a new system or application, the default will be for access to be blocked.

For cloud services, such as those built in Azure, many services are accessible from the internet by default. This includes web apps, virtual machines, storage accounts and, to a certain extent, SQL and Cosmos DB databases.

This means that network level security must be explicitly designed and built if external access to components is to be restricted.

Network Versus Identity Security Controls

Although components in Azure, such as Storage and Databases, use keys to protect access to them, these keys only represent a single control. Modern Cloud-based systems should always adopt a ‘Security in Depth’ approach. This means that you should always assume at least one security layer can fail, and therefore you should employ controls in multiple areas to mitigate this risk.

As an example, in August 2021 an update to the Jupyter Notebooks feature of Azure Cosmos DB introduced a flaw that led to Cosmos database access keys being accessible WITHOUT authenticating to the Azure tenant.

Customers who had an open network policy and relied solely on access keys to protect their Cosmos DB databases were at significant risk of being compromised. However, if policies and standards were in place to restrict access to specific internal networks or address ranges, then even if keys had been compromised, the databases would still be protected at the network layer.

In another example, a developer may inadvertently publish code that contains storage account access keys to a public GitHub repository. Again, if network level controls are enforced, a malicious actor still cannot access the storage account even if they have obtained those keys.
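The two examples above can be sketched in a few lines of Python. This is only an illustration of the layering idea, not how Azure implements it: the address ranges, the key value and the function names are all hypothetical.

```python
from ipaddress import ip_address, ip_network

# Hypothetical values, for illustration only.
ALLOWED_NETWORKS = [ip_network("10.0.0.0/8"), ip_network("203.0.113.0/24")]
VALID_KEYS = {"example-access-key"}


def network_allows(source_ip: str) -> bool:
    """Network layer: is the caller inside an approved address range?"""
    addr = ip_address(source_ip)
    return any(addr in net for net in ALLOWED_NETWORKS)


def key_allows(access_key: str) -> bool:
    """Identity layer: does the caller hold a valid access key?"""
    return access_key in VALID_KEYS


def is_request_allowed(source_ip: str, access_key: str) -> bool:
    """Both layers must pass, so a leaked key on its own is not enough."""
    return network_allows(source_ip) and key_allows(access_key)
```

With this arrangement, a caller holding a leaked key but connecting from an unapproved address (for example `198.51.100.7`) is still rejected.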

Solution? Establish Secure Landing Zones

Many cloud providers now recommend the use of Landing Zones. These are standardised and automated platforms that ensure your services are secure and compliant whenever any new service is deployed, without any involvement from an engineer. Security rules should be applied by default, not by exception.

Creating secure Landing Zones is essentially a two-part process. The architecture, or design principles, will dictate how resources in Azure should be configured and then tooling such as Azure Policies, Blueprints or pre-built CI/CD pipelines can be employed to enforce those principles.

We will first look at some example design principles, before then looking at technologies that we can use to automate them.

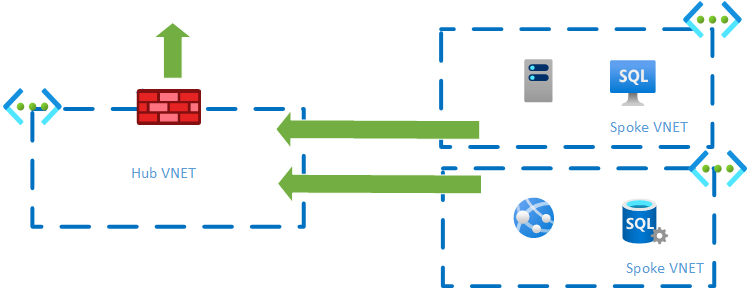

Design Principle 1 – Employ a hub-spoke network model with a centralised firewall

A hub-spoke network model enables a single point for egress and/or ingress traffic.

For egress traffic you must deploy spoke VNETs that are peered to the hub VNET. Within the hub you can then deploy either an Azure Firewall or a 3rd party option.

User Defined Routes (UDRs) must also be created in the spoke networks to force traffic out through the firewall.

The hub-spoke model can also be used for ingress. However, many Azure services, such as App Gateways or Azure Front Door, provide more advanced firewall services for protecting applications from advanced attacks.
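To show why a default route in the spoke is enough to push outbound traffic through the hub, here is a minimal sketch of longest-prefix route selection. The route table contents, the firewall address `10.0.1.4` and the spoke range `10.1.0.0/16` are assumptions made up for the example.

```python
from ipaddress import ip_address, ip_network

# Hypothetical spoke route table: (address prefix, next hop).
FIREWALL_IP = "10.0.1.4"  # assumed private IP of the hub firewall
ROUTES = [
    (ip_network("10.1.0.0/16"), "VnetLocal"),  # traffic that stays inside the spoke
    (ip_network("0.0.0.0/0"), FIREWALL_IP),    # everything else goes to the firewall
]


def next_hop(destination: str) -> str:
    """Return the next hop for a destination using longest-prefix match."""
    addr = ip_address(destination)
    matches = [(net, hop) for net, hop in ROUTES if addr in net]
    # The most specific matching prefix wins; 0.0.0.0/0 matches any IPv4 address.
    _, best_hop = max(matches, key=lambda match: match[0].prefixlen)
    return best_hop
```

Here traffic to an address inside the spoke stays local, while any internet-bound destination resolves to the firewall as its next hop.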

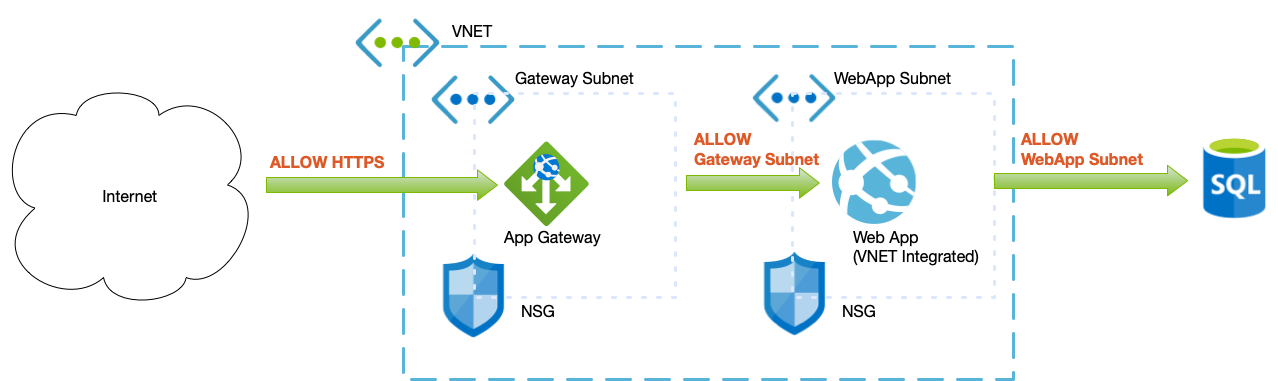

Design Principle 2 – Inbound public (web/https) traffic should always be via App Gateway / Front Door

All public traffic should be via an Application Gateway or Front Door service. Both these services include Web Application Firewall (WAF) capabilities for adding packet level inspection. By default, WAF services are either disabled or set to audit only (Azure calls the audit and block modes Detection and Prevention). For new services, the WAF should be set to audit for a period of 3 months and the logs monitored for issues. Once any identified issues are remediated, the WAF should be set to block.

In order for a WAF to be effective, services should be configured to only allow traffic from the App Gateway/Front Door and not any other route. How this is achieved is different for each service; the following are some examples.
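The difference between the audit and block settings can be illustrated with a toy model. This is a sketch only: the `Waf` class is hypothetical and the single pattern is deliberately naive, whereas a real WAF rule set is far richer.

```python
import re
from dataclasses import dataclass, field

# Deliberately naive pattern, for illustration only.
SUSPICIOUS = re.compile(r"('|--|<script)", re.IGNORECASE)


@dataclass
class Waf:
    mode: str = "audit"  # "audit" only logs, "block" also rejects
    log: list = field(default_factory=list)

    def handle(self, query: str) -> bool:
        """Return True if the request is passed on to the backend."""
        if SUSPICIOUS.search(query):
            self.log.append(query)  # matches are logged in both modes
            return self.mode != "block"
        return True
```

During the audit period the log shows which requests would have been rejected, so false positives can be tuned out before the mode is switched to block.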

· App Services / Function Apps (on Premium plan)

o Enable VNET integration for internal & outbound comms

o Set Access Restrictions to only allow access from App Gateway / Front Door

· AKS Clusters

o Build AKS as internal clusters

o Control inbound and outbound access via NSG

Design Principle 3 – Restrict access to storage and Databases to only allow from internal IP ranges or VNETs

Storage and databases should have public access disabled and use VNET integration or Private Endpoints to ensure traffic can only originate from on-premises networks or App Services.

This way, our actual data is protected behind several layers of firewall rules and packet inspections. The addition of a WAF-enabled App Gateway provides additional layers of protection by protecting against the top 10 web application security risks (known as the OWASP Top 10), such as SQL Injection or Cross-Site Scripting.

This layered approach is a great example of security in depth as your backend data is protected by identity mechanisms, multiple layers of network firewalls (including Network Security Groups), and layer 7 packet inspection (the WAF).

Further Principles

The above are just some examples. There are of course many more, such as ensuring data is encrypted where possible, enforcing minimum TLS protocol versions, directing logs to analytics platforms or SIEMs, and so on.

The point is that these rules should be defined, in conjunction with your CISO and risk teams, to ensure that you have considered and documented the required steps to keep your platform secure, as well as being able to evidence this to your compliance officers.

Automation Recommendations

Once you have established what your rules are, you must establish a way to enforce them and, in regulated industries such as finance, show evidence of that enforcement.

A traditional on-premises mindset would use process to achieve this. Stage gates are often used to review and authorise the different stages of a solution – from design sign off to build sign off. This is often a manual task performed by the relevant stakeholders such as Architecture, Security and Risk, and Support teams.

Unfortunately, there are a few inherent problems with this. Firstly, you are dependent on the understanding and experience of the people signing off the solution. This can go one of two ways: either too lenient, in which case you put yourself at risk, or too restrictive, whereby sign off is frustrating and lengthy.

Another issue is that this simply does not align with modern development practices, whereby releases are performed regularly and architectural choices are made within a sprint.

The ideal would be to codify these rules so that they can be more easily audited (i.e. to ensure they have been applied), or better still, enforced, either by denying non-compliant configurations or by automatically updating them.

Within Azure, we have different ways of achieving this – and often a combination can be used.

Automation Option 1 – Create standardised CI/CD Pipelines

One way to ensure compliance is to control the code that deploys a resource. For example, to enforce the use of a hub/spoke model, whereby specific routing tables, network peering, and NSGs must be used, you could develop a pipeline that takes in some variables (such as an IP range) and then deploys the network. This takes a lot of guesswork out of the process, and creates consistency across your estate.
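As a rough sketch of the idea, the function below turns a handful of inputs into a complete spoke definition, with everything else fixed by the template. The function name, the dictionary keys and the `vnet-hub` name are hypothetical and do not follow any real ARM or Bicep schema; a real pipeline would pass values like these to its deployment templates.

```python
from ipaddress import ip_network


def build_spoke(name: str, address_space: str, firewall_ip: str) -> dict:
    """Build a spoke definition from a few inputs; the rest is standardised."""
    vnet = ip_network(address_space)
    if vnet.prefixlen > 23:
        raise ValueError("address space must be /23 or larger to fit two /24 subnets")
    # Split the range into /24 subnets and use the first two as standard tiers.
    app_subnet, data_subnet = list(vnet.subnets(new_prefix=24))[:2]
    return {
        "vnet": {"name": f"vnet-{name}", "addressSpace": str(vnet)},
        "subnets": {"app": str(app_subnet), "data": str(data_subnet)},
        "peering": {"remote": "vnet-hub"},  # every spoke peers to the same hub
        "routeTable": [{"prefix": "0.0.0.0/0", "nextHop": firewall_ip}],
    }
```

For example, `build_spoke("payments", "10.20.0.0/22", "10.0.1.4")` always produces the same subnet layout, peering and default route, so the only decision left to the requesting team is the address range.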

Automation Option 2 – Employ Azure Policies

Without policies, anyone can deploy services with non-compliant configurations. Azure Policies can be used to either report on or enforce the agreed security controls. Whether a control is reported on or enforced depends on how the policy itself is set up.

In general, policies can be used in the following ways:

· Audit/AuditIfNotExists

The criteria are reported on. For Audit, the item is reported if it exists; for AuditIfNotExists, the item is reported if it is not present. An example might be to check for the enablement of TDE on a SQL database. If TDE isn’t enabled and the policy is AuditIfNotExists, then the component in question will be flagged in a non-compliance report in Azure Security Center.

Auditing is an effective way of understanding the impact on your estate of policies without causing any detrimental effects (such as deployment issues). However, because there is no automated enforcement, any issues flagged must be manually remediated – this can take considerable time and effort depending on the size of your estate.

· Deny

The Deny effect prevents any non-compliant resource from being deployed. For example, if you create a policy to enforce TDE, any attempt to create a non-compliant component, or to update an existing one to a non-compliant state, will result in a policy error.

Deny policies enforce your compliance. However, if teams are not aware of the policy, it can lead to confusion and delays in deploying new infrastructure.

Deny rules should be used when your engineering teams fully understand the design principles and how the policy prevention errors are surfaced.

· DeployIfNotExists

Components will be automatically configured as per the policy if they don’t already employ the required configuration item. For example, if the policy states that TDE must be enabled, any attempt to deploy a SQL Database will automatically result in the database being deployed with TDE.

DeployIfNotExists Policies are an effective way of enforcing rules without the user knowing. They are particularly useful for enforcing configurations such as log routing, optional parameters and tags.
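The three behaviours described above can be compared side by side in a small sketch, using the TDE example. This is a simplified model rather than the Azure Policy engine: the `apply_policy` function and the `tde_enabled` field are hypothetical, and in Azure the DeployIfNotExists remediation runs as a follow-up deployment rather than inline as shown here.

```python
def apply_policy(effect: str, resource: dict) -> dict:
    """Evaluate a single 'TDE must be enabled' rule against a resource."""
    if resource.get("tde_enabled", False):
        # Already compliant: every effect lets the deployment through untouched.
        return {"deployed": True, "resource": resource, "flagged": False}
    if effect == "audit":
        # Deployment proceeds; the resource is only reported as non-compliant.
        return {"deployed": True, "resource": resource, "flagged": True}
    if effect == "deny":
        # Deployment is rejected outright.
        return {"deployed": False, "resource": resource, "flagged": False}
    if effect == "deployIfNotExists":
        # Deployment proceeds and the missing setting is added automatically.
        fixed = {**resource, "tde_enabled": True}
        return {"deployed": True, "resource": fixed, "flagged": False}
    raise ValueError(f"unknown effect: {effect}")
```

Running the same non-compliant database definition through each effect shows the trade-off: audit reports without disrupting anyone, deny stops the deployment, and deployIfNotExists fixes the configuration without the requester needing to act.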

Through the use of Azure Policies, you can control nearly all aspects of Azure component configuration – regardless of what roles a user has. So if you have created a Deny Public IP policy, then even a resource owner (the most powerful role) cannot deploy a public IP object.

Policies are also great for deploying tags on resources – for example you can set a charge code or project tag and make sure this is always deployed to resources when they are created.

Automation Option 3 – Azure Blueprints

Azure Blueprints are another way to enforce standardised resources and configurations. In some ways they are similar to CI/CD pipelines, in that they are used to automatically deploy and configure resources within an Azure subscription.

The difference, however, is that they can be controlled and enforced – unlike CI/CD pipelines which can be edited either before or after deployment.

An example might be a Blueprint that, when applied to a new Subscription, deploys a VNET with set parameters, route tables and NSG rules. The Blueprint can then be set to lock to prevent anyone or anything tampering with what has been deployed.

Blueprints also have separate roles for creating and publishing them, along with version control. In this way, a configuration can be created by the engineering team, but must then be signed off and published by a security architect before it can be used.

How to get started

Hopefully I’ve demonstrated some areas and tools to consider when securing your cloud resources. Unfortunately, it isn’t a simple and quick task. It takes planning and involves security, risk, support and engineering teams to devise a strategy that is compliant and that everyone is happy with.

Once the design is documented, you can then go about building the automation controls to make sure that all your services become secure by default, whilst still empowering your development team to make changes as required.

Talk to Iridium

Our industry-leading Cloud Practice team works with businesses to understand their goals and establish tailored Landing Zones, allowing them to innovate, save money and optimise agility. Please don’t hesitate to contact Iridium’s Cloud Practice lead, brett.hargreaves@ir77.co.uk, with any Cloud questions or requirements. I also discuss this topic in greater detail in my book Exam Ref AZ-304 Microsoft Azure Architect Design Certification and Beyond.
